Introduction: Quantifying AI Performance – The Role of Benchmarks

Evaluating the performance of Large Language Models (LLMs) is a complex task. Anecdotal evidence and qualitative assessments are valuable, but objective benchmarks provide a standardized way to compare models on the same tasks. These benchmarks cover capabilities such as coding, reasoning, general knowledge, and commonsense understanding. This page looks at how DeepSeek-V2 and ChatGPT-4/GPT-4 Turbo perform on several key benchmarks, presenting the numbers and analyzing the results. Benchmarks are imperfect and capture only a snapshot of a model’s capabilities, but they still offer useful insight.

Understanding Benchmark Methodology

Before we delve into the results, it’s important to understand how these benchmarks work:

Multiple-Choice Questions: Many benchmarks present models with multiple-choice questions, requiring them to select the correct answer.

Code Generation: Coding benchmarks require models to generate code that solves a specific problem. The generated code is then run against test cases to check that it is functionally correct.

Human Evaluation: Some benchmarks involve human evaluators assessing the quality and relevance of the model’s output.

Zero-Shot, Few-Shot, and Fine-Tuning: Models can be evaluated in different settings:

Zero-Shot: The model is given the task without any prior examples.

Few-Shot: The model is given a small number of examples before being asked to perform the task.

Fine-Tuning: The model is further trained on task-specific data, typically the benchmark’s training split, before being evaluated on held-out test questions.
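The difference between the zero-shot and few-shot settings, and the way multiple-choice answers are scored, can be sketched in a few lines of Python. This is a minimal illustration, not the prompt format of any particular benchmark: the function names `build_prompt` and `accuracy` and the "Question / Answer" layout are assumptions made for this example.

```python
from typing import Sequence

LETTERS = "ABCDEFGH"


def build_prompt(
    question: str,
    choices: Sequence[str],
    examples: Sequence[tuple[str, Sequence[str], str]] = (),
) -> str:
    """Format a multiple-choice question as a prompt.

    With no examples this is a zero-shot prompt; passing solved
    (question, choices, answer_letter) examples makes it few-shot.
    The layout is hypothetical, chosen only for illustration.
    """

    def block(q: str, opts: Sequence[str], answer: str = "") -> str:
        lines = [f"Question: {q}"]
        lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(opts)]
        # Solved examples end with the answer letter; the final block is left open.
        lines.append(f"Answer: {answer}".rstrip())
        return "\n".join(lines)

    parts = [block(q, opts, ans) for q, opts, ans in examples]
    parts.append(block(question, choices))
    return "\n\n".join(parts)


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of predicted answer letters that match the gold letters."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(
        p.strip().upper() == g.strip().upper() for p, g in zip(predictions, gold)
    )
    return correct / len(gold)
```

Calling `build_prompt(question, choices)` yields a zero-shot prompt, while passing a handful of solved `examples` yields a few-shot prompt for the same question. The same `accuracy` function scores both settings, which is what makes their results comparable.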

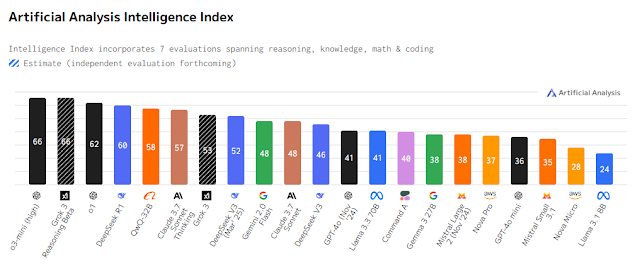

Key Benchmarks and Results (as of 2024 – figures are approximate and subject to change)

HumanEval: (Coding) – This benchmark assesses the model’s ability to generate Python code from docstrings.

DeepSeek-V2: Pass@1 Rate: ~75-80% (often surpassing GPT-4)

ChatGPT-4/GPT-4 Turbo: Pass@1 Rate: ~67-72%

Analysis: On these figures, DeepSeek-V2 scores higher than ChatGPT-4/GPT-4 Turbo on HumanEval, suggesting a stronger ability to generate functionally correct Python code.

MBPP (Mostly Basic Programming Problems): (Coding) – A second coding benchmark, made up of short, entry-level Python programming problems.

DeepSeek-V2: Pass@1 Rate: ~85-90%

ChatGPT-4/GPT-4 Turbo: Pass@1 Rate: ~78-85%

Analysis: As on HumanEval, DeepSeek-V2 scores higher than ChatGPT-4/GPT-4 Turbo on MBPP, although the two quoted ranges meet at 85%, so the gap here is narrower.

MMLU (Massive Multitask Language Understanding): (General Knowledge) – This benchmark tests the model’s knowledge with multiple-choice questions across 57 subjects, ranging from elementary mathematics to law.

DeepSeek-V2: Accuracy: ~70-75%

ChatGPT-4/GPT-4 Turbo: Accuracy: ~78-85%

Analysis: ChatGPT-4/GPT-4 Turbo generally achieves higher accuracy on MMLU, demonstrating its broader general knowledge base.

HellaSwag: (Commonsense Reasoning) – This benchmark assesses the model’s ability to choose the most plausible continuation of a given scenario.

DeepSeek-V2: Accuracy: ~80-85%

ChatGPT-4/GPT-4 Turbo: Accuracy: ~85-90%

Analysis: ChatGPT-4/GPT-4 Turbo exhibits slightly better performance on HellaSwag, indicating stronger commonsense reasoning abilities.

ARC (AI2 Reasoning Challenge): (Reasoning) – This benchmark tests the model’s ability to answer science questions requiring reasoning.

DeepSeek-V2: Accuracy: ~65-70%

ChatGPT-4/GPT-4 Turbo: Accuracy: ~70-75%

Analysis: Performance is relatively close on ARC, with ChatGPT-4/GPT-4 Turbo having a slight edge.

TruthfulQA: (Truthfulness) – This benchmark assesses whether the model gives truthful answers to questions that tend to elicit common human misconceptions.

DeepSeek-V2: Truthfulness Score: ~60-65%

ChatGPT-4/GPT-4 Turbo: Truthfulness Score: ~70-75%

Analysis: ChatGPT-4/GPT-4 Turbo demonstrates a higher degree of truthfulness, potentially due to its alignment training.
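The Pass@1 figures quoted for HumanEval and MBPP refer to the probability that a generated solution passes the benchmark’s tests. The sketch below shows the unbiased pass@k estimator that is commonly used with HumanEval; whether the figures quoted on this page were computed this way is an assumption, and the function names are chosen here for illustration.

```python
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: number of solutions sampled for the problem
    c: number of those solutions that pass all tests
    k: number of attempts the metric allows

    Computes 1 - C(n - c, k) / C(n, k): one minus the probability that
    k solutions drawn without replacement from the n samples all fail.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples exist, so any draw of k contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    if not results:
        raise ValueError("results must not be empty")
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With a single sample per problem (`n = 1`, `k = 1`), the benchmark score reduces to the fraction of problems solved on the first attempt, which is how a Pass@1 rate is usually read.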

Visualizing the Data
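The approximate ranges quoted in the results section can be compared at a glance with a simple text chart. The sketch below uses only the ranges stated on this page; plotting the midpoint of each range is an assumption made for illustration and is not a reported score.

```python
# Approximate ranges (percent) as quoted on this page:
# benchmark -> (DeepSeek-V2 range, ChatGPT-4/GPT-4 Turbo range)
SCORES = {
    "HumanEval": ((75, 80), (67, 72)),
    "MBPP": ((85, 90), (78, 85)),
    "MMLU": ((70, 75), (78, 85)),
    "HellaSwag": ((80, 85), (85, 90)),
    "ARC": ((65, 70), (70, 75)),
    "TruthfulQA": ((60, 65), (70, 75)),
}
MODELS = ("DeepSeek-V2", "ChatGPT-4/GPT-4 Turbo")


def midpoint(score_range):
    """Midpoint of a (low, high) range; an illustrative summary, not a reported score."""
    low, high = score_range
    return (low + high) / 2


def leader(benchmark):
    """Name of the model whose quoted range has the higher midpoint."""
    mids = [midpoint(r) for r in SCORES[benchmark]]
    return MODELS[mids.index(max(mids))]


def render_chart(scores=SCORES, points_per_mark=2):
    """Return a text bar chart with one '#' per `points_per_mark` percentage points."""
    lines = []
    for benchmark, ranges in scores.items():
        for model, (low, high) in zip(MODELS, ranges):
            bar = "#" * int(midpoint((low, high)) // points_per_mark)
            lines.append(f"{benchmark:<11}{model:<22}{bar} ~{low}-{high}%")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_chart())
```

Running the script prints two bars per benchmark, one for each model, labeled with the range quoted in the text.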

Interpreting the Results: What Do the Numbers Tell Us?

The benchmark results reveal a clear pattern:

DeepSeek-V2 excels in coding tasks. Its quoted scores on HumanEval and MBPP are higher than those of ChatGPT-4/GPT-4 Turbo.

ChatGPT-4/GPT-4 Turbo demonstrates stronger general knowledge, reasoning, and truthfulness. It achieves higher scores on MMLU, HellaSwag, ARC, and TruthfulQA, although the gap on ARC is small.

Both models are constantly improving. New versions and fine-tuning efforts keep shifting these numbers, so any comparison can go out of date quickly.

Limitations of Benchmarks

Benchmarks also have well-known limitations:

Benchmark Specificity: Performance on a specific benchmark doesn’t necessarily translate to real-world performance.

Data Contamination: Models may have been inadvertently trained on data that overlaps with the benchmark datasets, which can inflate their scores.

Evolving Benchmarks: Benchmarks are constantly evolving to address limitations and better reflect real-world challenges.
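The data contamination point above can be made concrete with a rough overlap check: if long word sequences from a benchmark item also appear in a training document, the item may have leaked into training data. This is a minimal sketch under simplifying assumptions: whitespace tokenization, exact n-gram matching, and a default n-gram length of 8 are all choices made here for illustration, not an established standard.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Set of lowercase word n-grams in `text` (naive whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item: str, corpus_doc: str, n: int = 8) -> float:
    """Fraction of the benchmark item's n-grams that also occur in the document.

    Returns 0.0 when the item is shorter than n tokens. A value near 1.0
    suggests the item appears almost verbatim in the document.
    """
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0
    return len(item_grams & ngrams(corpus_doc, n)) / len(item_grams)
```

A high ratio flags an item for manual review rather than proving contamination, and paraphrased or translated copies of a benchmark would pass this check undetected.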

Conclusion: A Nuanced Comparison

The performance benchmarks provide a valuable, data-driven comparison of DeepSeek-V2 and ChatGPT-4/GPT-4 Turbo. While DeepSeek-V2 shines in coding, ChatGPT-4/GPT-4 Turbo excels in general knowledge and reasoning. The choice between the two models depends on the specific application and priorities. Continued monitoring of benchmark results is essential to track the progress of these and other LLMs.
