Recommended Posts


List

- 2-1 Let's prepare the environment to use Stable Diffusion

- 2-2 Let's build the environment using Google Colab

- 2-3 Let's build the Stability Matrix in the local environment

- 2-4 Let's create images with simple words

- 2-5 Download the model

- 2-6 Download the VAE

- 4-1 Let's figure out what you can do with img2img

- 4-2 Let's create an image using Sketch

- 4-3 Let's edit an image using Inpaint

- 4-4 Apply Inpaint to modify an image

- 4-5 Extend an image using Outpainting

- 4-6 Increase the resolution of an image using img2img

- 4-7 Let's upscale with the extension function

- 6-1 Let's learn what we can do with additional learning

- 6-2 Let's create an image using LoRA

- 6-3 Create your own dedicated painting style LoRA

- 6-4 Let's create various types of LoRA

- 6-5 Let's evaluate the learning content

Creating an Image with ControlNet in Stable Diffusion WebUI

Once you have ControlNet installed and the necessary models downloaded, here's the typical workflow for generating an image using it:

- Open your Stable Diffusion WebUI in your web browser.

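- Find the ControlNet panel. With the sd-webui-controlnet extension, ControlNet is not a separate top-level tab: it appears as a collapsible "ControlNet" section near the bottom of both the "txt2img" and "img2img" tabs. Click it to expand it.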

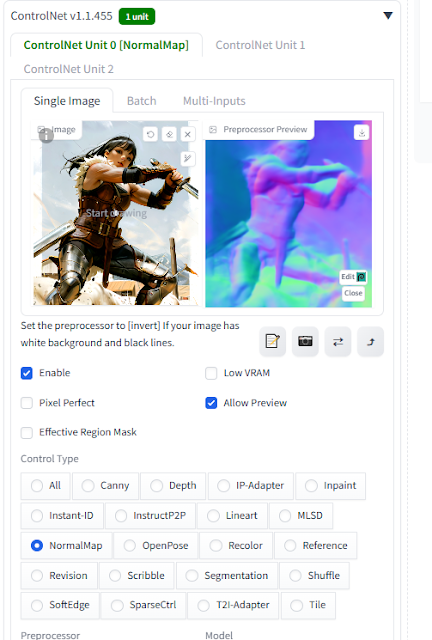

- Enable ControlNet: Find the checkbox labeled "Enable" in the ControlNet panel and make sure it is checked; otherwise ControlNet has no effect on your generation.

- Upload your Control Image:

- Locate the "Control Image" section.

- You can either drag and drop an image into the designated area or click the "upload" button to browse your files and select an image.

- This image will serve as the spatial guidance for ControlNet.

- Select the Control Type:

- Below the "Control Image" section, you'll find a set of options labeled "Control Type" (in the sd-webui-controlnet extension these are shown as radio buttons rather than a dropdown).

- Each option is a ControlNet mode designed to extract a different type of spatial information from your control image. Common options include:

- Canny: Detects edges in the image.

- Scribble: Interprets rough sketches.

- Depth: Estimates the depth of objects.

- NormalMap: Creates a surface normal map.

- Segmentation: Uses semantic masks to guide generation.

- OpenPose: Detects human or animal poses.

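- MLSD: Detects straight line segments (the original ControlNet release calls these "Hough lines"), which suits architecture and interior scenes.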

- Tile: Useful for enhancing details and resolution.

- IP-Adapter: Transfers style and content from an image.

- Choose the Control Type that best suits the kind of spatial control you want to exert based on your control image.

- Select the Preprocessor (Optional but Often Necessary):

- Depending on the chosen "Control Type," you might need to select a "Preprocessor."

- The preprocessor analyzes your control image and extracts the relevant information (e.g., runs the Canny edge detection algorithm).

- The available preprocessors will vary based on the selected "Control Type."

- If you have already prepared the control map yourself (for example, an edge map or pose skeleton made in another tool), choose the "none" preprocessor so the image is passed to the ControlNet model unchanged.

- Click the "Preprocessor" dropdown and select the appropriate option. Selecting a Control Type usually fills in a sensible default preprocessor and model, so you often only need to confirm them.
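To make "the preprocessor analyzes your control image" concrete, here is a minimal pure-Python sketch of the first stage of edge detection: a Sobel gradient magnitude computed over a tiny grayscale image stored as nested lists. It is a simplified illustration, not the extension's Canny implementation, which (like OpenCV's `cv2.Canny`) also blurs the image, thins edges with non-maximum suppression, and applies two thresholds. The function name `sobel_magnitude` is hypothetical.

```python
import math

# Sobel kernels that respond to horizontal (x) and vertical (y) brightness changes.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude of a grayscale image given as a list of rows.

    Border pixels stay at 0.0 because the 3x3 kernel does not fit there.
    """
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    pixel = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * pixel
                    gy += SOBEL_Y[ky][kx] * pixel
            out[y][x] = math.hypot(gx, gy)
    return out


# A 5x5 image with a dark left part and a bright right part: one vertical edge.
img = [[0, 0, 255, 255, 255] for _ in range(5)]
mag = sobel_magnitude(img)
```

Large values mark pixels where brightness changes sharply; an edge-based preprocessor turns this kind of signal into the black-and-white edge map that is fed to the ControlNet model.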

- Select the ControlNet Model:

- Below the "Preprocessor" dropdown, you'll find a dropdown menu labeled "Model."

- This menu lists the ControlNet model weights you have downloaded.

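- Choose the ControlNet model that corresponds to your selected "Control Type" (e.g., control_v11p_sd15_canny for Canny). The model must also match your base checkpoint: ControlNet models trained for SD 1.5 do not work with SDXL checkpoints, and vice versa.

- If the dropdown is empty, click the refresh button next to it. If it is still empty, the model weights are not in the directory the extension scans (typically models/ControlNet inside your WebUI folder), and you'll need to follow the installation instructions again.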
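If you are unsure which downloaded file belongs to which Control Type, you can check the filenames yourself. The sketch below is a hypothetical helper (the names `matching_models`, `list_model_files`, and the keyword table are ours, not the extension's) that filters model files by the keyword found in ControlNet 1.1 filenames such as `control_v11p_sd15_canny.pth`. It does not reproduce the extension's own filtering logic.

```python
import os

# Hypothetical keyword per Control Type, based on the ControlNet 1.1
# naming pattern (e.g. "control_v11p_sd15_canny.pth").
CONTROL_TYPE_KEYWORDS = {
    "Canny": "canny",
    "Depth": "depth",
    "NormalMap": "normal",
    "OpenPose": "openpose",
    "MLSD": "mlsd",
    "Scribble": "scribble",
    "Segmentation": "seg",
    "Tile": "tile",
}

MODEL_EXTENSIONS = (".safetensors", ".pth", ".ckpt")


def matching_models(control_type, filenames):
    """Return model files whose names contain the keyword for control_type."""
    keyword = CONTROL_TYPE_KEYWORDS[control_type]
    return sorted(
        name for name in filenames
        if name.lower().endswith(MODEL_EXTENSIONS) and keyword in name.lower()
    )


def list_model_files(directory):
    """List files in a model directory; an empty list if it does not exist."""
    if not os.path.isdir(directory):
        return []
    return os.listdir(directory)
```

For example, `matching_models("Canny", list_model_files("models/ControlNet"))`, where `models/ControlNet` is assumed to be your WebUI's ControlNet model folder. An empty result for every Control Type is a strong hint that the weights are in the wrong place.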

- Adjust ControlNet Parameters (If Necessary):

- Some ControlNet modes have adjustable parameters that can influence how the control signal is applied. These might appear below the model selection.

- For example, with the Canny preprocessor you can adjust the low and high threshold values, which decide how strong a brightness change must be to count as an edge. Experiment with these settings to fine-tune the control.
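The two Canny thresholds work as a pair. In standard Canny edge detection, gradient values above the high threshold become strong edges, values below the low threshold are discarded, and values in between are kept only if they connect to a strong edge (hysteresis). Below is a minimal pure-Python sketch of that double-threshold step on a precomputed gradient-magnitude grid; the function name `hysteresis` is ours, and real implementations such as OpenCV's `cv2.Canny` combine it with blurring and non-maximum suppression.

```python
def hysteresis(magnitude, low, high):
    """Canny-style double thresholding on a grid of gradient magnitudes.

    Pixels >= high are strong edges. Pixels >= low are weak candidates that are
    kept only if connected (8-neighbourhood) to a strong edge. Returns 0/1 rows.
    """
    height, width = len(magnitude), len(magnitude[0])
    edges = [[0] * width for _ in range(height)]
    # Start from every strong pixel.
    stack = [(y, x) for y in range(height) for x in range(width)
             if magnitude[y][x] >= high]
    for y, x in stack:
        edges[y][x] = 1
    # Grow strong edges into connected weak pixels.
    while stack:
        y, x = stack.pop()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (0 <= ny < height and 0 <= nx < width
                        and not edges[ny][nx] and magnitude[ny][nx] >= low):
                    edges[ny][nx] = 1
                    stack.append((ny, nx))
    return edges


row = [[250, 150, 150, 0, 150]]
print(hysteresis(row, 100, 200))  # [[1, 1, 1, 0, 0]]
```

The isolated 150 at the end is dropped because nothing connects it to a strong edge. Lowering the thresholds keeps more weak gradients, which is why the Canny preview gains more (and noisier) lines; raising them leaves only the strongest outlines.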

- Choose the "txt2img" or "img2img" tab:

- ControlNet works in conjunction with the standard text-to-image or image-to-image generation processes.

- Use the tab in which you want to generate your final image. Each tab has its own ControlNet settings, so configure ControlNet in the same tab you generate from.

- Enter your Text Prompt:

- In the prompt box, describe the image you want to generate. Be as specific as needed. ControlNet will help guide the spatial arrangement of the elements described in your prompt.

- (Optional) Configure "img2img" Settings:

- If you are using the "img2img" tab, upload your initial image and adjust parameters like "Denoising strength" to control how much the generated image deviates from the original.
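To build intuition for "Denoising strength", here is a sketch of how many denoising steps an img2img run actually performs. It follows the step calculation in the Hugging Face diffusers img2img pipeline (its `get_timesteps` method): the input image is noised partway into the schedule, and only about `int(steps * strength)` steps remain. AUTOMATIC1111's WebUI behaves similarly by default, but treat this as an approximation rather than its exact code. The function name `img2img_steps` is hypothetical.

```python
def img2img_steps(num_inference_steps, strength):
    """Approximate number of denoising steps run by img2img.

    Modeled on diffusers' img2img get_timesteps: strength 0.0 keeps the input
    image untouched (0 steps), strength 1.0 runs the full schedule.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


print(img2img_steps(20, 0.75))  # 15
```

A low strength therefore both runs fewer steps and starts from a less noisy version of your image, which is why the result stays close to the original.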

- Adjust Generation Parameters:

- Set your desired image size, sampling steps, CFG scale, and batch count as you normally would for Stable Diffusion.

- Ensure ControlNet is Enabled in the Generation Settings:

- In the "txt2img" or "img2img" tab, scroll down to the "ControlNet" section. You should see a preview of your uploaded control image and the selected ControlNet settings.

- Make sure the "Enable" checkbox within this section is still checked. You can also adjust the "Control Mode" here:

- Balanced: Attempts to satisfy both the prompt and the control.

- My prompt is more important: Gives more weight to the text prompt.

- ControlNet is more important: Gives more weight to the control signal.

- You can also adjust the "Control Weight" to increase or decrease the influence of ControlNet. A weight of 1 is the default.
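The same settings can also be sent through the WebUI's HTTP API when the WebUI is started with the `--api` flag. The sketch below builds a request body for the `/sdapi/v1/txt2img` endpoint with one ControlNet unit placed under `alwayson_scripts`. The unit field names (`enabled`, `input_image`, `module`, `model`, `weight`, `control_mode`) follow the sd-webui-controlnet API documentation as we understand it; field names have changed between extension versions, so verify them against your installation. The function name `build_txt2img_payload` and its defaults are hypothetical.

```python
import base64


def build_txt2img_payload(prompt, control_image_bytes, *, module="canny",
                          model="control_v11p_sd15_canny", weight=1.0,
                          control_mode="Balanced", width=512, height=512,
                          steps=20, cfg_scale=7.0, n_iter=1):
    """Build a txt2img request body with a single ControlNet unit.

    Field names are assumptions based on the sd-webui-controlnet API docs.
    """
    unit = {
        "enabled": True,  # the "Enable" checkbox
        "input_image": base64.b64encode(control_image_bytes).decode("ascii"),
        "module": module,  # the Preprocessor
        "model": model,  # ControlNet model name as listed in the UI
        "weight": weight,  # Control Weight (1.0 is the default)
        # "Balanced", "My prompt is more important", or
        # "ControlNet is more important"
        "control_mode": control_mode,
    }
    return {
        "prompt": prompt,
        "width": width,
        "height": height,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "n_iter": n_iter,  # batch count
        "alwayson_scripts": {"controlnet": {"args": [unit]}},
    }
```

For example, `build_txt2img_payload("a cozy living room", open("edges.png", "rb").read(), weight=0.8)` describes the same generation you would set up in the UI with a Control Weight of 0.8.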

- Click the "Generate" button:

- Stable Diffusion will now generate an image that attempts to match both your text prompt and the spatial structure derived from your control image by ControlNet.
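Clicking "Generate" corresponds to POSTing a request body to the API. This minimal sketch uses only the Python standard library; the URL assumes the WebUI's default local address (`http://127.0.0.1:7860`) and is an assumption about your setup. The response's `images` field holds base64-encoded images; with ControlNet enabled it may also contain the preprocessor's detected map after the generated images, depending on your settings. `post_json` and `decode_images` are hypothetical helper names.

```python
import base64
import json
import urllib.request

# Assumed default local address; the WebUI must be launched with --api.
DEFAULT_URL = "http://127.0.0.1:7860/sdapi/v1/txt2img"


def post_json(payload, url=DEFAULT_URL, timeout=600):
    """POST a JSON payload and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def decode_images(response_json):
    """Decode the base64 strings in the response's "images" list to bytes."""
    return [base64.b64decode(data) for data in response_json.get("images", [])]
```

For example, `images = decode_images(post_json(payload))`, then write `images[0]` to a file opened with `open("out.png", "wb")`.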

- Review and Adjust:

- Examine the generated image. If it's not quite what you were looking for, you can:

- Adjust your text prompt.

- Try a different "Control Type" or "Preprocessor."

- Modify the ControlNet parameters.

- Change the "Control Mode" or "Control Weight."

- Experiment with different sampling settings.

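When reviewing results, it helps to change one setting at a time while keeping the seed fixed, so that differences come from ControlNet rather than from new random noise. The sketch below (a hypothetical helper, not part of the WebUI) enumerates Control Weight and Control Mode combinations to try; the mode strings are the labels shown in the UI, and the default seed value is arbitrary.

```python
import itertools

CONTROL_MODES = ("Balanced", "My prompt is more important",
                 "ControlNet is more important")


def sweep_settings(weights, modes=CONTROL_MODES, seed=42):
    """Return every (weight, mode) combination, all sharing one fixed seed."""
    return [
        {"seed": seed, "weight": weight, "control_mode": mode}
        for weight, mode in itertools.product(weights, modes)
    ]


for setting in sweep_settings([0.5, 1.0]):
    print(setting)
```

Generating one image per combination and comparing them side by side makes it easier to see which weight and mode give the balance between prompt and control that you want.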

By following these steps, you can use ControlNet to control the spatial composition of your AI-generated images (edges, lines, depth, pose, or layout) within the Stable Diffusion WebUI. Expect to experiment: the best Control Type, preprocessor, weight, and mode depend on your control image and the result you want.
